Natural language processing

What motivates us

Natural language processing (NLP) is at the center of many recent advances in AI, including large language models such as GPT-3 and BERT. But while these methods work very well on general-purpose text, they are substantially less reliable on the more specialized, lower-volume text found in many domains within Bosch.

Our approach

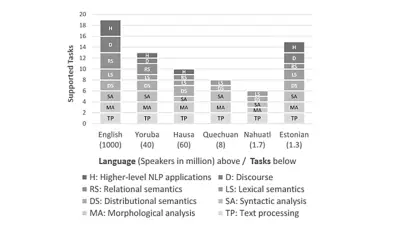

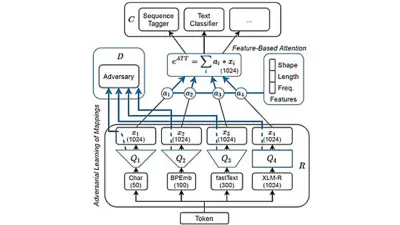

For scalable NLP in Bosch’s heterogeneous industrial context, a key challenge our research addresses is NLP in low-resource settings, i.e., settings where only a small amount of (annotated) data is available. To this end, we work on topics such as robust input representations, transfer learning, custom deep learning models, and the creation of custom training and evaluation data.

Application

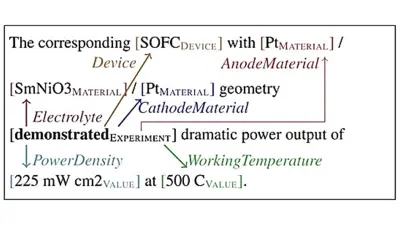

A lot of knowledge is hidden in large amounts of unstructured data available in various forms (e.g., scientific publications, web content, and corporate documents). NLP is therefore one of the fundamental technologies behind the intelligence of various Bosch products and services, especially for converting text data into knowledge, retrieving knowledge to meet users’ needs, and, more generally, following the path from text to knowledge to value. Our techniques are useful to business units that need to parse or understand large amounts of text data directly, and in settings where one needs to understand the semantic structure and meaning of the objects that text descriptions refer to. Two examples are the materials science and automotive domains.